Project Overview

In this project, my team programmed a Rethink Robotics Baxter robot to classify and pick up bottles and cans, then drop them into separate recycling bins. We used OpenCV to detect and locate the randomly placed bottles and cans, and MoveIt! for robotic manipulation. Take a look at the code on my GitHub. (Note: the GIF above is playing at 2x speed.)

Below is a link to a video (4x speed) of the project. The video first shows the bottles and cans being placed, then gives a quick view of the computer vision, and finally shows the robot in action from two different views.

MoveIt!

Robot operation was controlled entirely through ROS’s MoveIt! library for motion planning and manipulation, mainly its compute_cartesian_path command. After initializing a MoveGroupCommander for Baxter’s right arm, we add a table to the planning scene so that the planner keeps the arm from colliding with it.

The arm then moves to a position out of the camera’s field of view and calls the camera’s classification service. Once the objects have been located and classified, the arm moves to a predetermined home position above the objects. This home configuration was chosen through testing and keeps the arm’s motion smooth and predictable.
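The compute_cartesian_path call asks MoveIt! for a trajectory whose end effector follows straight lines between waypoint poses, sampled every eef_step metres, and reports what fraction of the requested path it could achieve. As a rough illustration of the sampling idea only (not MoveIt!'s implementation, which also solves inverse kinematics and checks collisions at every sample), the hypothetical helper below densifies a list of (x, y, z) waypoints into straight-line samples that are never more than eef_step apart.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]  # (x, y, z) in metres


def interpolate_cartesian(waypoints: List[Point], eef_step: float) -> List[Point]:
    """Densify straight-line segments so consecutive samples are at most eef_step apart.

    Hypothetical illustration: MoveIt! additionally runs IK and collision
    checks on each sample, which this sketch does not do.
    """
    if eef_step <= 0:
        raise ValueError("eef_step must be positive")
    if not waypoints:
        return []
    samples: List[Point] = [waypoints[0]]
    for start, end in zip(waypoints, waypoints[1:]):
        length = math.dist(start, end)
        # Fewest sub-segments such that none is longer than eef_step.
        n = max(1, math.ceil(length / eef_step))
        for i in range(1, n + 1):
            t = i / n
            # Linear interpolation between the two waypoints.
            samples.append(tuple(s + t * (e - s) for s, e in zip(start, end)))
    return samples
```

For example, `interpolate_cartesian([(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)], eef_step=0.25)` returns the five points at x = 0.0, 0.25, 0.5, 0.75 and 1.0. A smaller eef_step gives a path that hugs the straight line more closely at the cost of more samples to check.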

Computer Vision

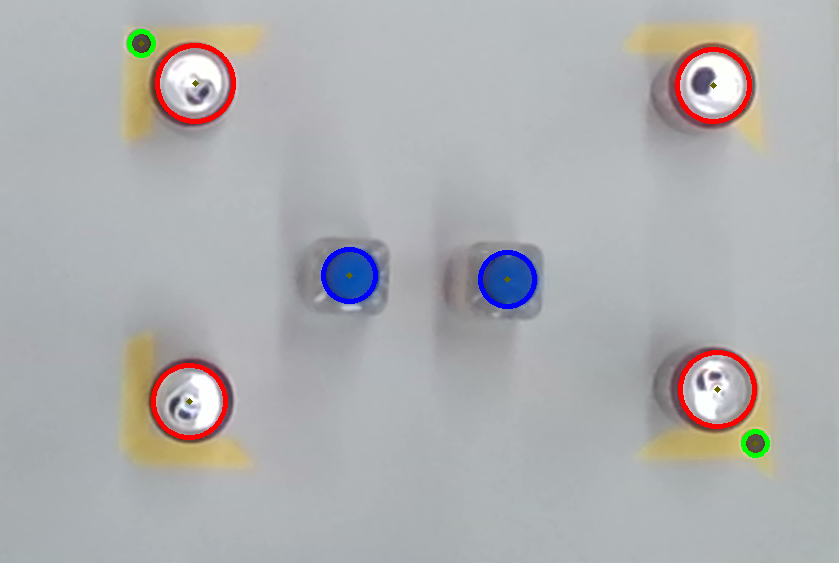

A RealSense camera captured real-time video of the objects placed in front of the robot. After calibrating the camera, we used OpenCV, specifically Hough circle detection, to identify, locate and classify the bottles and cans. The camera’s classification service returns a list containing the position and classification of every bottle and can in the camera’s view.
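OpenCV's cv2.HoughCircles returns either None or a float array of shape (1, N, 3), where each row is an (x, y, radius) circle in pixels. The write-up does not say how circles were turned into labels or robot coordinates, so the sketch below assumes one plausible approach: a radius threshold separates bottle caps from can tops (an assumption about how the two appear from above), and each circle centre is back-projected into a 3D camera-frame point with the standard pinhole model, given a depth reading and the camera intrinsics (fx, fy, cx, cy). The threshold, intrinsics, and all function names here are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Sequence, Tuple


@dataclass
class Detection:
    label: str  # "bottle" or "can"
    x: float    # metres, camera frame
    y: float
    z: float


def classify_radius(radius_px: float, threshold_px: float) -> str:
    # Assumption: bottle caps show up as smaller circles than can tops.
    return "bottle" if radius_px < threshold_px else "can"


def deproject(u: float, v: float, depth_m: float,
              fx: float, fy: float, cx: float, cy: float) -> Tuple[float, float, float]:
    """Pinhole back-projection of pixel (u, v) at a known depth."""
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return x, y, depth_m


def detections_from_circles(
    circles: Optional[Sequence],
    depth_at: Callable[[float, float], float],
    intrinsics: Tuple[float, float, float, float],
    threshold_px: float,
) -> List[Detection]:
    """circles: HoughCircles output (None, or shape (1, N, 3) of (x, y, r) pixels).
    depth_at: hypothetical callable returning depth in metres at pixel (u, v).
    intrinsics: (fx, fy, cx, cy) of the colour camera."""
    if circles is None:  # HoughCircles found nothing
        return []
    fx, fy, cx, cy = intrinsics
    detections: List[Detection] = []
    for u, v, r in circles[0]:
        x, y, z = deproject(u, v, depth_at(u, v), fx, fy, cx, cy)
        detections.append(Detection(classify_radius(r, threshold_px), x, y, z))
    return detections
```

In practice the circles would come from a call such as `cv2.HoughCircles(gray, cv2.HOUGH_GRADIENT, dp=1.2, minDist=40)` with parameters tuned to the scene, and the resulting camera-frame points would still need the camera-to-robot calibration transform before being sent to MoveIt!.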

Action Sequence

1. Baxter’s right arm moves to the home position, where the robot is safely above all objects.

2. A camera livestream opens that captures, classifies and locates all objects on the table. (For this project, the only objects are bottles and cans.)

3. For each object on the table, Baxter’s head displays either the can image or the bottle image, depending on the classification.

4. Baxter’s right arm moves to the object’s (x, y) coordinates at a safe z height above it. This height is the same for bottles and cans.

5. The arm then moves down to the perch height for the object’s classification. (For example, the arm stops higher above the table for a bottle than for a can, since bottles are taller.)

6. Once safely at the perch height, the arm moves down again, this time aligning the object between the grippers.

7. Baxter’s arm grasps the object.

8. The arm moves back up to the “safe position”, the same position as in step 4.

9. Baxter moves back to the home position. This step was added to ensure predictable behavior of the robot arm.

10. Depending on the object’s classification, the arm moves to the appropriate bin, and Baxter’s head displays the recycling image.

11. Once over the bin, Baxter opens its grippers and drops the object. To show that the object has been recycled, Baxter displays the bin image.

12. Steps 3 through 11 are repeated for all objects found.
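Steps 3 through 11 amount to a fixed sequence of targets per object. The sketch below generates that sequence as named actions with (x, y, z) poses that could then be fed to compute_cartesian_path one segment at a time. Every height, the home pose, and the bin positions are made-up placeholder values rather than the ones tuned on Baxter, and grasping, releasing and head-display changes are represented as named events rather than real gripper or display calls.

```python
from typing import Dict, List, Optional, Tuple, Union

Pose = Tuple[float, float, float]  # (x, y, z) in metres, robot base frame
Action = Tuple[str, Union[Pose, str, None]]

# Hypothetical placeholder values, not the project's tuned numbers.
HOME: Pose = (0.6, -0.3, 0.35)
SAFE_Z = 0.30                                              # same for both classes (step 4)
PERCH_Z: Dict[str, float] = {"bottle": 0.20, "can": 0.12}  # bottles are taller (step 5)
GRASP_Z: Dict[str, float] = {"bottle": 0.08, "can": 0.02}  # object between grippers (step 6)
BINS: Dict[str, Pose] = {"bottle": (0.3, -0.7, 0.30), "can": (0.5, -0.7, 0.30)}


def pick_and_place_plan(label: str, x: float, y: float) -> List[Action]:
    """Ordered actions for one object, mirroring steps 3 to 11."""
    safe: Pose = (x, y, SAFE_Z)
    return [
        ("display", label),                # step 3: bottle or can image
        ("move", safe),                    # step 4: above the object
        ("move", (x, y, PERCH_Z[label])),  # step 5: perch height
        ("move", (x, y, GRASP_Z[label])),  # step 6: grasp height
        ("grasp", None),                   # step 7
        ("move", safe),                    # step 8: back to the safe position
        ("move", HOME),                    # step 9: home for predictability
        ("display", "recycling"),          # step 10: recycling image
        ("move", BINS[label]),             # step 10: over the matching bin
        ("release", None),                 # step 11: open grippers
        ("display", "bin"),                # step 11: bin image
    ]


def plan_all(detections: List[Tuple[str, float, float]]) -> List[Action]:
    # Step 1 (move home) runs once; classification (step 2) is assumed to have
    # produced `detections` as (label, x, y); steps 3-11 repeat per object.
    plan: List[Action] = [("move", HOME)]
    for label, x, y in detections:
        plan.extend(pick_and_place_plan(label, x, y))
    return plan
```

Keeping the plan as plain data separates the decision of where to go from the MoveIt! calls that execute it, which makes the sequence easy to print and check before the real arm moves.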

Team Members

- Yael Ben Shalom

- Chris Aretakis

- Jake Ketchum

- Mingqing Yuan